Robots are the future, and we don’t trust them

When a robot in an Amazon fulfillment center accidentally punctured a can of bear repellent, sending 24 human workers to the hospital, the reaction on social media was swift, with jokes that it might be the start of a robot takeover.

The rebellion starts, but #Robot mistakenly confuses #humans with bears. Interesting times! (joking and best wishes to affected people) #Amazon workers hospitalized after warehouse robot releases bear repellent #AI #ArtificialIntelligence #robots #Robotics https://t.co/cGcnkcRqO0

— marcobubba (@MarcoBubba) December 10, 2018

https://twitter.com/Shadowdancesaga/status/1071949626981433344

But the incident raises a more realistic, immediate quandary for AI designers: To what extent are we willing to tolerate robot mistakes?

Maybe not much, at least not yet. Studies paint a picture of deep skepticism toward robotic assistants. In a Pew Research survey last year, 72 percent of Americans said they were at least somewhat worried about a world where machines perform many of the tasks traditionally done by humans.

Recent research has found that people demand a much higher success rate from robots than from their flesh-and-bone counterparts. That’s the case even though the overwhelming evidence suggests these new technologies perform better than humans at some of the same tasks.

A 2016 study out of the University of Wisconsin sought to test individuals’ tolerance for mistakes made by artificial intelligence software.

Researchers asked a group of undergraduate students to forecast scheduling for hospital rooms. They had the option to receive help from either an “advanced” computer system or a human specialist. After both began making bad predictions, however, students were much quicker to disregard the computer than the human in subsequent trials.

Students found it in themselves to accept the flaws in other people, but not in the machine.

What’s at stake with robot mistakes

The real-world stakes associated with whether humans trust machines vary widely.

On one end, there are the economic consequences. Frustration with the reliability of Siri, the iPhone’s virtual assistant, for example, has led to limited use of the feature and a general perception that Apple’s software prowess lags behind that of its competitors.

But mistakes associated with artificial intelligence can also have tragic consequences.

Uber earlier this year pulled its self-driving cars off the road when one of them hit and killed a pedestrian. (The company resumed testing of its vehicles in Pittsburgh on Thursday, but they remain grounded in Arizona.)

Yet the number of autonomous vehicle collisions remains very low, and those collisions typically occur when the vehicle is being driven manually.

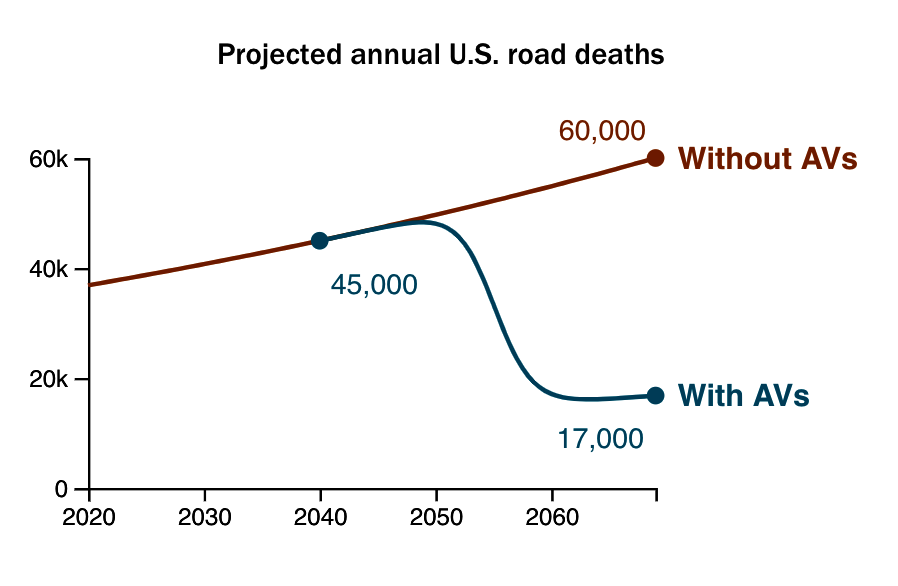

The RAND Corporation last year compared two possible scenarios for the rollout of self-driving vehicles. In the first, sales of autonomous vehicles to the public begin in 2020 when they’re estimated to be 10 percent better at driving than humans. In the second scenario, public sales are delayed until the vehicles are “almost perfect” at driving — which RAND estimates as being plausible in 2040 at the earliest. Their analysis found that choosing the later date would result in more than half a million fatalities that otherwise wouldn’t occur.

The RAND Corporation predicts that more lives could be saved if autonomous vehicles were adopted earlier, as opposed to waiting for the technology to develop further. Courtesy: RAND Corporation

“It might sound counterintuitive that waiting for safer cars would save fewer lives,” the report concludes. “But the most important factor is time…putting the cars on the road sooner — even if they’re not perfect — can save more lives and improve the cars’ performance more quickly than waiting for perfection.”

A Kelley Blue Book survey from 2016 found 63 percent of respondents believed roads would be safer if autonomous vehicles were “standard-issue,” i.e. a substantial number of all cars on the road. That’s not surprising considering the NHTSA attributes 94 percent of accidents to human error. But at the same time, survey after survey has found that at least half of Americans would prefer not to use a self-driving car.

October’s deadly Lion Air crash in Indonesia may illustrate the worst potential consequences of such mistakes. All 189 people on board were killed when the Boeing 737 MAX plane unexpectedly dove into the Java Sea. Though the investigation has not yet pinpointed the exact cause of the crash, information recovered from the jet’s data recorder led investigators to conclude that a malfunctioning sensor erroneously indicated the aircraft had stalled, triggering the aircraft to dive in an effort to boost airspeed. In its report, the FAA wrote that pilots can stop this response by pressing two buttons, but some have said the information wasn’t clearly outlined in instruction manuals.

More common than deadly outcomes from combined human and mechanical error is the risk of negative social consequences.

A ProPublica investigation found that decisions about whether to offer defendants early release were being made in courtrooms around the country based in part on an algorithm’s guess as to how likely they were to reoffend, known as their predicted recidivism rate. But some of the data points that went into those estimates, like the defendant’s ZIP code, were believed to reinforce racial biases.

Search engines are guilty of a similar problem, according to Safiya Noble, author of “Algorithms of Oppression: How Search Engines Reinforce Racism.”

“Present technology isn’t neutral. These companies don’t represent the populace and so they don’t foresee all the negative consequences that can arise from their creations,” she said.

Noble said closer scrutiny and more regulation of the industry are necessary to limit the unintended consequences of AI.

Why we don’t trust robots

So why does public resistance remain so high? Theories abound.

Practically speaking, humans are notoriously bad at comprehending large numbers, argues Spencer Greenberg, mathematician and founder of ClearerThinking.org. The difference between one fatality for every 10 million miles driven versus one every 498 million miles can be difficult to grasp. After all, the average American drives fewer than 1 million miles in an entire lifetime. A video of an autonomous car driving at full speed as a woman unwittingly crosses the road is likely to garner a much more visceral reaction than any statistics.

An engineer points to a Huawei Mate 10 Pro mobile used to control a driverless car during the Mobile World Congress in Barcelona, Spain. Many people say they would still rather drive than travel in a driverless car. Photo by Yves Herman/Reuters

Additionally, not everyone stands to gain equally from a switch to autonomous cars. Some drivers may feel more restricted by autonomous cars because they would be forced to give up some control over their vehicles, according to James McPherson, founder of the consulting firm SafeSelfDrive.

“Autonomous vehicles inspire people in proportion to the amount of autonomy they add to their lives,” said McPherson.

Cars have long been branded as a symbol of independence. Think of all of the country songs that talk about driving on the open road or the feeling teenagers get when they first receive their driver’s license. In 2016, 51 percent of respondents to a Kelley Blue Book survey said they favored keeping their cars.

On the other hand, for someone who is homebound, blind, or unlicensed, a self-driving car could provide more freedom and independence. Waymo, the autonomous vehicle division of Google, often promotes those kinds of uses in its marketing material, with ads showing autonomous cars ferrying the elderly and young children.

More broadly, experts believe the way we speak about robots and artificial intelligence is inherently flawed. There’s a certain irony in forgiving humans for mistakes but not robots, which are themselves programmed by humans.

“Autonomy, self…these words apply to humans,” McPherson said. “We bestow sentience upon robots with our words. In reality, these machines are software-driven, not self-driven. If we remember that, we can get a better sense of how reliable they will be (software does crash) and who is responsible when they fail.”

In other words, humans make mistakes, and consequently so will software, because all the factors that go into a computer-led decision were at some point chosen by humans.

Meanwhile, humans still do some technical things better than robots.

A study by Stanford University researchers found a kidney removal procedure performed by a robot can cost more and take longer than when done by a human surgeon. Robots, they concluded, are useful when a high level of precision is needed, but for simple operations they’re oftentimes too slow. Humans benefit from dexterous hands that can grab and move objects quickly.

How to ease fears

Even when a robot is safer and more effective at performing a task than humans, companies still have to think hard about how they’ll earn public trust so that it is adopted. That’s especially the case considering today’s climate of skepticism towards the industry.

Greenberg said as much as we’d like to assume humans make all of their decisions based on sound logic and evidence alone, it’s not that simple.

“Imagine that you’re crossing what appears to be a very rickety bridge. Knowing statistics about its high degree of safety may or may not calm your fears as you’re walking across it,” he said. “We often make risk assessments in a much more visceral, intuitive way.”

Those who work with AI are trying to ease some of those fears.

At the Massachusetts Institute of Technology, roboticists are devising machines that are designed to do no harm.

“We now have a new species, really, of inherently safe robots that can work right alongside people,” MIT roboticist Julie Shah told the PBS NewsHour. “They can bump into you, and not permanently harm you in any way. That’s a game-changer.”

And companies are rolling out their own technology slowly, keeping a number of safety checks in place.

Last week, Waymo launched its much-anticipated self-driving ride-hailing program, dubbed Waymo One, to a limited group of customers in Arizona. The cars feature “safety drivers” positioned in the front seat to take over in case the car gets confused.

The safety drivers may also provide some needed psychological relief as more people try the cars for the first time, Greenberg said.

“We’re not used to seeing cars drive themselves,” he said. “If we see a car driving itself, our thoughts may immediately go to questions about safety, triggering a danger response.”
